
5 min read

Mimi Brooks

9/17/25 2:15 PM


“Sensemaking is more than an act of analysis; it’s an act of creativity.”

—Deborah Ancona & Peter Senge

Sensemaking has long been considered one of the most essential capabilities of leaders and teams. Karl Weick described it as the human process of interpreting complex, shifting environments by “making the strange familiar, and the familiar strange.”

In today’s AI-enabled organizations, sensemaking is changing shape. The challenge is no longer about whether leaders alone can “make sense” of volatile environments. It’s about how leaders orchestrate human + machine sensemaking systems by curating, questioning, and integrating insights generated by algorithms, digital twins, and always-on data flows.

AI doesn’t remove the need for sensemaking. It multiplies it. Leaders now face a new kind of work: meta-sensemaking. Instead of interpreting the environment directly, they must understand how the AI interprets it. The tools below support that work:

| Tool | Definition |
|---|---|
| Model Auditing | The structured, critical evaluation of AI models to ensure they are safe, fair, explainable, accountable, robust, and fit for purpose. It is a cornerstone of responsible AI. |
| Data Provenance Tracking | Recording and tracing the origin, history, and transformations of data throughout its lifecycle to ensure transparency, accountability, and reproducibility in AI. |
| Causal Inference Tools | Methods and frameworks for identifying, estimating, and validating cause-and-effect relationships from data, helping distinguish true causal impacts from mere correlations. |
| Feature Attribution | Techniques that determine how much each input feature contributes to a model’s prediction, helping explain and interpret the model’s decision-making process. |
| Model Sensitivity Analysis | Testing how changes in input features affect a model’s outputs to assess its stability, robustness, and reliance on specific variables. |
| Counterfactual Explanations | Descriptions of how a model’s output would change if certain input features were altered, showing users the minimal changes that would lead to a different decision or outcome. |
| User-in-the-Loop Testing | Involving real users in the evaluation and refinement of AI systems to ensure the model’s behavior aligns with human expectations, usability needs, and contextual understanding. |
| Fairness Metrics | Quantitative measures of whether an AI model treats different groups (e.g., by race, gender, or age) equitably, used to detect and mitigate bias in model predictions. |
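To make two of these tools more concrete, here is a minimal Python sketch of a fairness metric (demographic parity difference, the gap in positive-prediction rates between groups) and a one-at-a-time sensitivity check. It is illustrative only and not a method described in this article: the toy scoring model, the feature names `income` and `tenure`, and the group labels are hypothetical, and demographic parity is just one of several fairness metrics an audit might use.

```python
from typing import Callable, Dict, List, Sequence


def selection_rate(preds: Sequence[int]) -> float:
    """Share of predictions that are positive (1)."""
    return sum(preds) / len(preds) if preds else 0.0


def demographic_parity_difference(preds: Sequence[int], groups: Sequence[str]) -> float:
    """Largest gap in selection rate between any two groups; 0.0 means parity."""
    by_group: Dict[str, List[int]] = {}
    for pred, group in zip(preds, groups):
        by_group.setdefault(group, []).append(pred)
    rates = [selection_rate(p) for p in by_group.values()]
    if not rates:
        return 0.0  # no data, so no measurable gap
    return max(rates) - min(rates)


def one_at_a_time_sensitivity(
    model: Callable[[Dict[str, float]], float],
    inputs: Dict[str, float],
    feature: str,
    delta: float,
) -> float:
    """Change in model output when a single feature is nudged by `delta`."""
    perturbed = dict(inputs)  # copy so the caller's inputs are untouched
    perturbed[feature] += delta
    return model(perturbed) - model(inputs)


# Hypothetical toy scoring model, used only for illustration.
def toy_model(x: Dict[str, float]) -> float:
    return 0.5 * x["income"] + 2.0 * x["tenure"]


if __name__ == "__main__":
    preds = [1, 1, 0, 1, 0, 0]
    groups = ["A", "A", "A", "B", "B", "B"]
    print(round(demographic_parity_difference(preds, groups), 3))  # 0.333
    applicant = {"income": 10.0, "tenure": 3.0}
    print(one_at_a_time_sensitivity(toy_model, applicant, "tenure", 1.0))  # 2.0
```

In this toy data, group A receives positive predictions at twice the rate of group B. An audit would flag a gap like that for human investigation rather than treat it as proof of bias, which is exactly the kind of judgment the leader still has to supply.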

The risk isn’t that AI takes over sensemaking. It’s that leaders will outsource meaning to the machine without applying human judgment.

Historically, sensemaking has been about plausible narratives. Leaders weave scattered signals into stories people can understand and act upon.

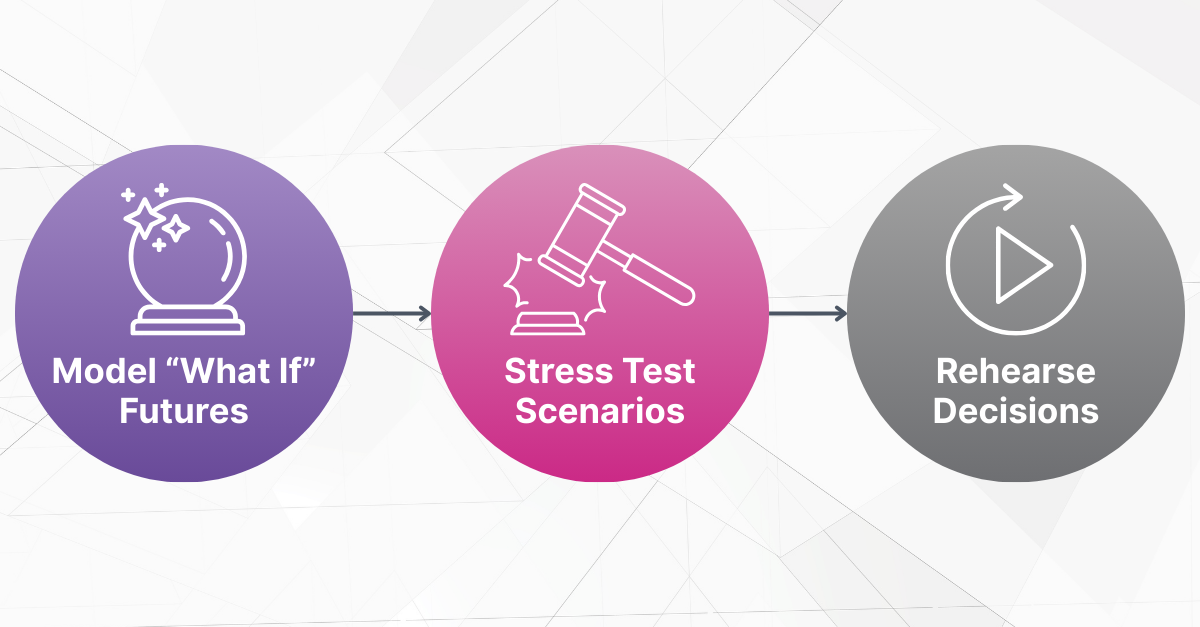

In the digital twin era, this expands into prospective sensemaking. Leaders don’t just tell stories; they simulate them. With DTOs, leaders can model “what if” futures, test them under stress, and rehearse decisions before making them.

The crucial point is that narratives and human context must come first. Narratives reveal lived experience, tacit knowledge, and anomalies that don’t show up in data exhaust. AI and DTOs can then extend those narratives by clustering, simulating, and scaling them into multiple futures. Without this grounding, leaders risk treating algorithmic outputs as objective “truth” rather than as one perspective.

While stories are still how people make meaning, simulations now make those stories testable and adaptable.

Credibility has always multiplied the impact of leadership action. In the AI era, credibility extends beyond the leader, as employees, customers, and regulators now ask: Can we trust the systems you deploy?

Leaders must act as stewards of system credibility: transparent about data lineage and model bias, accountable for ethical use and privacy, and ready to explain failure modes before they happen.

A leader can be personally credible yet lose legitimacy if the systems they sponsor are opaque or unfair. In the AI playbook, credibility must apply equally to leaders and the intelligent systems they rely on.

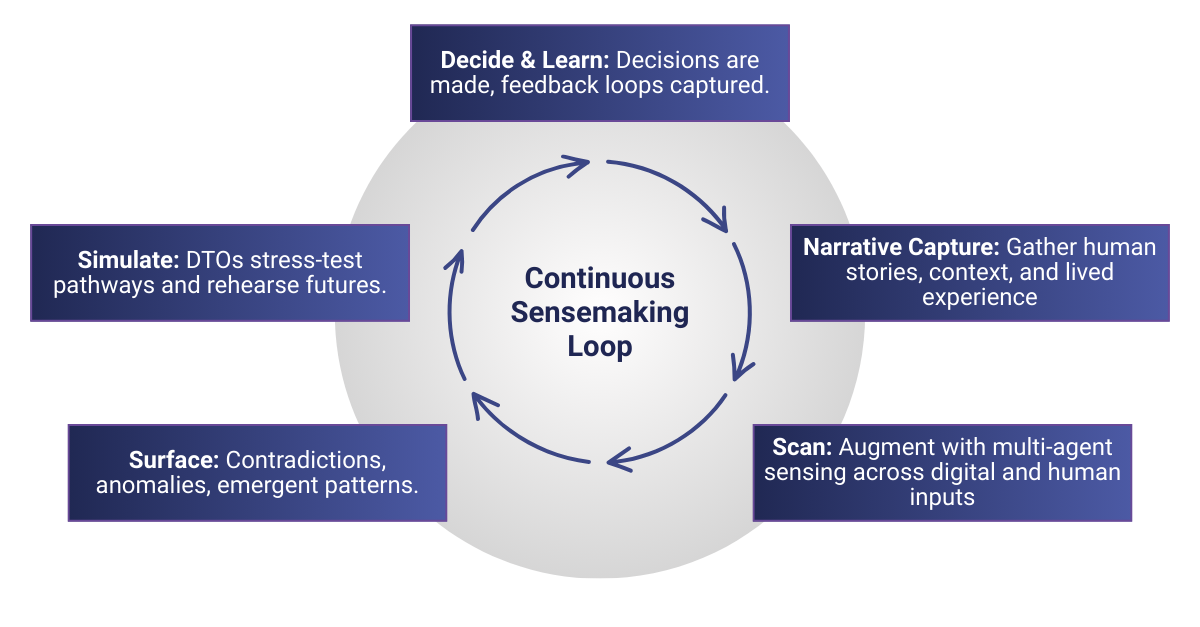

Sensemaking has also always been a collective endeavor, and boundary-spanning, externally oriented teams have always been stronger at it. In the AI era, teams must go further and become Hybrid X-Teams, in which human and machine agents collaborate: AI agents scan and cluster weak signals, humans probe contradictions and frame meaning, and digital twins test and coordinate futures. Leadership thus shifts from “assembling the right people” to assembling the right people + the right machines in a dynamic configuration.

The table below outlines six key differences between traditional and AI-era sensemaking, highlighting how leadership, credibility, narrative, and data work are evolving to meet the demands of the digital twin and artificial intelligence era.

| Traditional Sensemaking | AI-Era Sensemaking |
|---|---|
| Human-only interpretation | Meta-sensemaking: Leaders curate and audit AI insights |
| Episodic scans of environment | Continuous, multi-agent sensing across platforms, humans, and data streams |
| Narrative as end point | Narrative-first + simulation: Human context, then DTOs/AI futures |
| Credibility = leader’s trust + competence | System credibility: Trust must extend to AI systems and models |
| Inward, bounded teams | Hybrid X-Teams: Humans + AI agents collaborating, boundary-spanning |
| Data patterns dominate | Narrative + data hygiene: Capture stories and context before patterning |

In the AI playbook, sensemaking looks less like a one-time event and more like a continuous loop.

This loop turns sensemaking into an organizational system capability, not just a personal trait of leaders.

Traditional notions of sensemaking are incomplete. Sensemaking is no longer just a human capability; it is an AI-augmented leadership system. Leaders who ignore AI in sensemaking risk irrelevance, and those who over-rely on it risk hollow credibility. Those who integrate narrative-first inputs with machine-driven simulation will set the pace for the intelligent enterprise.

Sensemaking has always been the “first move” of leadership. In the AI playbook, it is also the most human move, because while machines can process signals and simulate futures, only humans can ask: Which future is worth pursuing, and why?

That is the new work of sensemaking. And it is the essential discipline of leaders building the organizations of tomorrow.

This is the second article in a DTO series.